This post was initially published on the EA Forum on June 14th 2021.

Introduction

Research has produced, and is producing, a lot of value for society. Research also takes up a lot of resources: global spending on R&D is almost US$1.7 trillion annually (approximately 2% of global GDP). This post is an attempt to map challenges and inefficiencies in the research system. If we addressed these challenges and inefficiencies, research could produce a lot more value for the same amount of resources.

The main takeaways of this post are:

- There are a lot of different issues that cause waste of resources in the research system

- In this post, these issues are categorized as related to (1) choice of research questions, (2) the quality of research and (3) the use of the results produced

- Though solutions and reform initiatives are outside the scope of this post, there does seem to be a lot of room for improvement

The terms “value” and “impact” are used very broadly here: it could be lives saved, technological progress, or a better understanding of the universe. The question of what types of value or impact we should expect research to produce is a big one, and not the one I want to focus on right now. Instead, I will just assume that when we dedicate resources to research we are expecting some form of valuable outcome or impact. I will attempt to map out inefficiencies in how that is produced.

This post is a result of a collaboration that came out of the EA Global Reconnect conference. I have received invaluable support and feedback from many people in this group, and I want to especially thank David Janků, David Reinstein, Edo Arad, Georgios Kaklamanos and Sophie Schauman who have contributed hugely. Mistakes are my own.

1. Overview

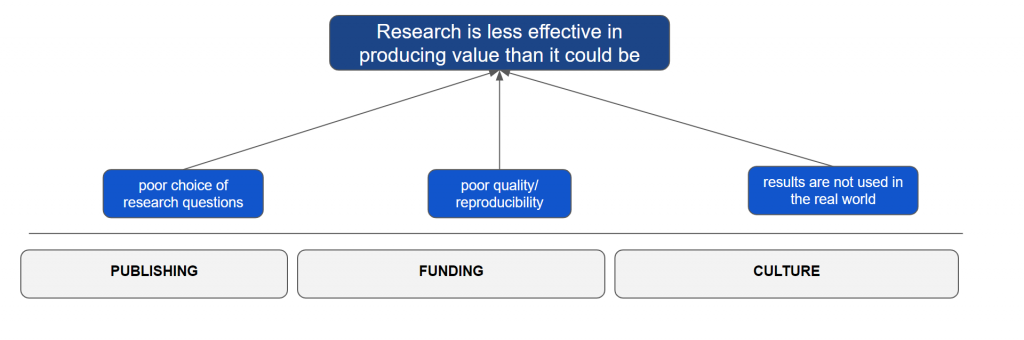

The causes of inefficiencies in the research system can be roughly categorized in three areas: (1) the choice and design of research questions can be flawed, (2) the research that is carried out can suffer from poor methodological quality and/or low reproducibility, and (3) even when research successfully leads to valuable results, they are not adequately incorporated into real-world solutions and decision-making. Each of these three areas can be broken up into underlying drivers, many of which fit into the broad categories of i. publishing, ii. funding and iii. culture.

Figure 1 shows the three main problem categories described in this post in a structure that builds on the idea of a problem tree. The main problem in focus (“Research is less effective in producing value than it could be”) is placed at the top, and the “root causes” of that problem are written out below, with arrows indicating causal relationships.

Figure 1. Inefficiencies in the research system are driven by poor choice and design of research questions, poor methodological quality and/or low reproducibility, and inadequate incorporation into real-world solutions and decision-making. Each of these three areas can be broken up into underlying drivers, many of which fit into the broad categories of i. publishing, ii. funding and iii. culture.

In the sections below, this problem tree will be expanded by focusing on one of these three issues at a time and exploring the underlying causes for why it occurs. Concepts that correspond to a box in the problem tree will be written in bold.

There are clearly also other ways in which resources are wasted within the research system that are not covered here (e.g. time spent on resubmitting proposals or papers without having received constructive feedback, or just poor time- and project management). To make this mapping a manageable task, I have opted to only include issues that could jeopardize the entire value of the research output, and not anything that only slows down progress of a given project (or makes it more expensive).

Finally, many of the identified root causes might not be consistently problematic: they might be an issue in one field but not in another, or it might be that some people see them as a problem while others see them as functional. I have chosen to include root causes where either 1) there seems to be good reason to believe that they are a significant driver of the problem they are pointing to, or 2) there seems to be a widespread notion of them as a significant driver. The reasoning behind this is on the one hand that it would be very difficult to consistently judge and rank the magnitude of different drivers, and on the other hand that just eliminating drivers that I don’t believe are significant could make the mapping appear spotty. As an example, I might not think that restricted access is among the most important problems in science, but excluding it from the mapping would seem weird since so much of the attention in metascience is focused on open access solutions.

2. Poor choice of research questions

The choice and design of research questions for a project are fundamental for the potential value of the results. Many times it will be a matter of values, priorities, and ideology to determine what constitutes a good research question: one person might find it extremely important to investigate how the well-being of a cat is affected when it is left alone during the day, while someone else would think this is a terrible waste of resources. Other times a research question may be flawed in ways where it does not fulfill the intentions of either the entity that funded the research or the needs of the intended target group for the results.

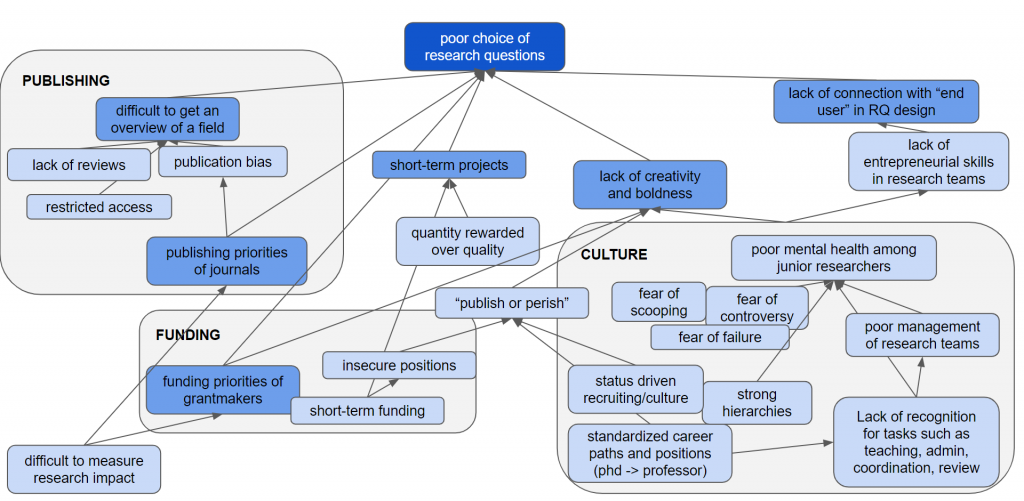

Figure 2 presents a mapping of underlying drivers for poor choice of research questions. The direct causes that have been identified are the difficulties with getting an overview of a field, the publishing priorities of journals, the funding priorities of grantmakers, short-term projects, lack of creativity and boldness, and a lack of connection with the “end user” in the design of research questions. All of these have additional underlying drivers. Some are also interlinked with each other. As an example, the arrows illustrate how the publishing priorities of journals are a cause of publication bias, which in turn makes it difficult to get an overview of a field.

Note that this kind of problem mapping can be used to quickly construct a theory of change for an intervention targeting one of the root causes; improving the publishing priorities of journals could reduce publication bias and make it more manageable to get an overview of a field, which could lead to better choice of research questions (and more effective value production of research, if we take it all the way to Figure 1).
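To make the idea concrete, here is a minimal sketch of how a problem tree could be stored as a small graph and queried for a causal chain. Only the single chain described above is encoded; the dictionary `CAUSES` and the function `theory_of_change` are hypothetical names chosen for this illustration, not part of any existing tool.

```python
from collections import deque

# Causal edges (cause -> effects), taken from the example chain in the text.
# The real figures contain many more boxes; this is only an illustration.
TOP_PROBLEM = "research is less effective in producing value than it could be"
CAUSES = {
    "publishing priorities of journals": ["publication bias"],
    "publication bias": ["difficult to get an overview of a field"],
    "difficult to get an overview of a field": ["poor choice of research questions"],
    "poor choice of research questions": [TOP_PROBLEM],
}


def theory_of_change(graph, root_cause, main_problem):
    """Return the shortest causal chain from root_cause to main_problem, or None."""
    queue = deque([[root_cause]])
    seen = {root_cause}
    while queue:
        path = queue.popleft()
        if path[-1] == main_problem:
            return path
        for effect in graph.get(path[-1], []):
            if effect not in seen:
                seen.add(effect)
                queue.append(path + [effect])
    return None


chain = theory_of_change(CAUSES, "publishing priorities of journals", TOP_PROBLEM)
print(" -> ".join(chain))
```

Reading the printed chain from left to right gives the outline of a theory of change for an intervention aimed at the first box.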

2.1 Difficulties in getting an overview of the field

One of the reasons why research questions might be poorly chosen can be that it’s difficult for the researcher to get a good overview of the field, identify the relevant unknowns, and have a clear picture of the state of the evidence. Partly, this might be an unavoidable consequence of a rich and intricate research field with a long history. It may take a lot of time to go through previous work, and to understand the underlying building blocks and tools supporting later work. Nonetheless, there are a few factors that make the situation worse than it would have to be.

One important factor is publication bias. This is the tendency to let the outcome of a research study influence the decision whether to publish it or not. Publication bias influences both what researchers choose to submit for publication and what the journals choose to publish. Generally speaking, a study is much more likely to be published if a statistically significant result was found or if an experiment was perceived as “successful” in some sense. This may give the impression that a specific hypothesis has never been tested, when in fact it has been tested once or more but the results were not deemed publication-worthy. The tests may have proved inconclusive, perhaps because of methodological barriers (mistakes that may be repeated in future work) or extremely noisy processes. Even with sound methodology and strong statistical power the result might simply be deemed as too ‘boring’ for publication.
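The effect of publication bias on the apparent state of the evidence can be illustrated with a small simulation. This is a sketch under stated assumptions, not a model of any real field: the true effect size, sample size, number of studies, and the rule that only results with a z-score above 1.96 get published are all made-up values chosen for illustration.

```python
import math
import random
import statistics

# All numbers below are illustrative assumptions.
TRUE_EFFECT = 0.1   # true mean effect, in standard-deviation units
SAMPLE_SIZE = 50    # observations per study
N_STUDIES = 2000    # number of independent studies of the same question
Z_CRITICAL = 1.96   # assumed publication threshold on the z-score


def run_study(rng):
    """Simulate one study and return its estimated effect (the sample mean)."""
    sample = [rng.gauss(TRUE_EFFECT, 1.0) for _ in range(SAMPLE_SIZE)]
    return statistics.fmean(sample)


def is_published(estimate):
    """Assumed rule: only significant results in the expected direction are published."""
    z = estimate * math.sqrt(SAMPLE_SIZE)  # standard error is 1 / sqrt(n)
    return z > Z_CRITICAL


rng = random.Random(42)
all_estimates = [run_study(rng) for _ in range(N_STUDIES)]
published = [e for e in all_estimates if is_published(e)]

print(f"studies run: {len(all_estimates)}, published: {len(published)}")
print(f"mean estimate, all studies:      {statistics.fmean(all_estimates):.3f}")
print(f"mean estimate, published only:   {statistics.fmean(published):.3f}")
```

Under these assumptions, most studies never appear in the literature, and the ones that do overstate the effect, because every published estimate must clear the significance threshold. A researcher surveying only the published record would therefore see neither the unpublished tests nor the true effect size.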

Another hindering factor is the lack of good review articles. Review articles survey and summarize previously published studies in a specific field, making them an invaluable resource to researchers. However, reviews are not always done in a systematic and transparently reported way, which can lead to a misleading impression of the current knowledge. It also happens that both previous research and reviews are ignored when designing research questions.

A third factor could be that much research is published with restricted access. This makes it very expensive to legitimately access research papers for someone who does not belong to a university that pays subscriptions. However, there are library websites, SciHub (papers) and Libgen (books), that provide free access to millions of research papers and books without regard for copyright. This is not an optimal solution, and these sites are blocked in many countries, but SciHub makes restricted access less of a practical problem than it would be otherwise.

2.2 Publishing priorities of journals

Since publishing research (preferably in the most high-status journals) is so important to a researcher’s career, the publishing priorities of scientific journals have a great influence on the choice of research questions. Researchers tend to pick and design research questions based on what they believe can yield publishable results, and this might not align with which questions are most important or valuable to study.

Journals generally favour novel, significant and positive results. From one perspective, this makes a lot of sense. These kinds of results seem likely to have the most value or impact, either for the research field or for society at large. However, if these are the only results that can be published in respected journals, it can make researchers hesitant to choose more “risky” research questions, even if they would be important.

A specific type of study that can be hard to publish is the replication study, where the aim is to replicate the results of a previously conducted study in order to verify that the results are valid. One could argue that this type of research is less valuable than novel studies of high quality, but there are instances where replication studies might be very important. For example, if the results are to be implemented on a very large and expensive scale, it is especially important to verify their validity. It can also be the case that the first study done on an important question has significant flaws or that there is suspicion of bias or irregularities.

So, why do the publication priorities of journals not align with what is best for value creation? That question in itself could probably be the basis for a separate article, but I believe one reason might be that it’s really hard to measure the impact of research. If the impact or societal value of research were easier to measure and demonstrate, it seems likely that at least some journals would use this to guide their publication priorities.

Scientific publishing can be a very profitable business. There is a lot of criticism towards publishers regarding both their business models and the incentives they create for science, as shown for example in this article covering the historical background of large science journals.

2.3 Funding priorities of grantmakers

Researchers depend on funding to carry out their work. Just as the choice of research question is influenced by what could generate publishable results, it is also directly influenced by what type of research questions attract funding. To be clear, funding priorities vary between different grantmakers and this is not necessarily a problem.

Public opinion influences research funding, both through political decisions that influence governmental funding and through independent grantmakers that fundraise from private donations. Detailed decisions about what to fund are generally made by academic experts, but high-level priorities (for example, funding for clean energy research) can be directly influenced by political decisions.

When a specific field gets a lot of hype, this can influence the direction of funding a lot. Such trends often result in researchers tweaking their applications to include concepts that are likely to attract funding (AI, machine-learning, nanotechnology, cancer…), even though that might not be the best choice from a perspective of picking the most important or valuable research question. Hype of an applied field can also incentivize researchers to communicate their research in a way that makes it seem more relevant to those applications than it really is. An example of this could be basic research biologists spinning their work so it seems like it will have important biomedical outcomes.

An interesting feature, especially considering that journals often value novelty very highly, is that grant proposals are less likely to get funding if the degree of novelty is high. The observed pattern does not seem to be explained by novel proposals being of lesser quality or feasibility.

Many funders have a stated goal of achieving impact with their funding, but as noted in the previous section it is really hard to measure the impact of research (almost no matter what type of impact it is you try to optimize for). Also, many funders have specific fields that they focus on, e.g. cancer research, so that even if they attempt to optimize for impact they can only do that within that specific field.

One specific challenge might be that working with research funding is generally not a high-status job. This means it can be difficult to attract highly skilled people, even though doing the job well is indeed very difficult. Since there are no clear feedback loops on how well different funding agencies succeed in spending their money on impactful research, there is not much incentive for improvements.

2.4 Short-term projects

Many research questions are designed with the consideration that they should be possible to answer in a relatively short study, perhaps in 1-3 years. This can be a problem when a short study is not enough to answer an important question. For a study of a healthcare intervention, for example, we might only know the impact after 6 months, when in reality it might be more relevant to know the impact after 10 years.

One reason for this can be short-term funding, with small grants that only cover a couple of years. Also, quantity tends to be rewarded over quality, so that researchers are rewarded more (career-wise) for having published a lot of small studies than a few large studies.

However, shorter grants can in fact often be combined to finance long-term projects. There are also large funders that work with large, long-term grants that are more directed to funding excellent researchers rather than specific projects. For specific cases this seems to have generated great results. Still it seems unclear if this would be a scalable solution to improve research effectiveness more generally.

2.5 Lack of creativity and boldness

When choosing and designing research questions, some choices are going to be riskier than others. A safe choice would be to go for research questions that lead the researcher into a field with a lot of funding (e.g. cancer research), while the study itself is something that is almost sure to generate publishable results.

A lot of important research might be risky. It might be in a novel or neglected research field where funding is more scarce or where there are fewer career opportunities, and it might be very unclear initially if the study will generate publishable results. To design and pursue such novel and high-risk research questions requires creativity and boldness. Meanwhile, there are many factors in the academic system that work against having bold and creative researchers.

A concept familiar to anyone in research is “publish or perish”: the idea that unless you keep publishing regularly in scientific journals your research career will quickly fail. Since publications are the output of science, it might seem obvious that productive researchers should be rewarded. However, the problem arises when a researcher has a better likelihood of career success from producing multiple low-quality papers on unimportant topics than from doing high-quality work on important questions that generates fewer publications.

The “publish or perish” pressure is driven by several factors: it links to short-term funding and insecure positions, but also to status-driven recruiting and culture.

A lack of creativity and boldness is also driven by a lot of interlinked factors of the academic culture: fear of failure and controversy, often poor mental health among junior researchers, and a hierarchical system where it could be risky for your career to stand out in the wrong way. The careers of researchers are generally set in very standardized career paths, where every researcher is supposed to advance in the same manner from PhD student to professor. This is clearly not a realistic expectation, considering that there are vastly more PhD students than professors.

The funding priorities of grantmakers also appear to play a role in holding back creativity and boldness, with funding agencies becoming more risk averse.

2.6 Lack of connection with end-user in the design of research question

Poor design of research questions in applied research is often linked to little or no contact with the intended end-user of the results. For example, a researcher who develops a new medical treatment might not have had much contact with the patients to understand if it would really improve their life quality. A researcher on economic policy might not have understood the considerations of the political decision-maker that would implement a new policy, and a materials researcher may have neglected important priorities in the industry that would use a new material. It seems common that researchers believe that they know the needs of their target groups without having actually asked them, which leads to research questions being framed in a way that does not yield useful results.

One reason for this disconnect could be a lack of entrepreneurial skills in research groups: it is uncommon for researchers to reach out to end users and make new connections outside academia. Researchers often get their understanding of the field through scientific conferences and through what is published in journals, neglecting informal non-academic sources of information. Researchers who are used to speaking in very specialized terminology might also find it difficult to communicate with non-experts or with experts from other fields.

It seems that entrepreneurial skills are not encouraged by the academic culture and system. The fact that career paths are standardized and hierarchies are often very strong could deter entrepreneurial people from an academic career.

Many grant-makers do request end-user participation in applied research projects, but in practice this is often established in a shallow way just to tick the box in the grant application.

3. Poor quality and reproducibility

Even if the research question is “good” in the sense that answering it would provide value of some kind for society, the value of the output still depends on the quality of the research. The Center for Open Science does a lot of work in this area, and many metascience publications have been written on this topic.

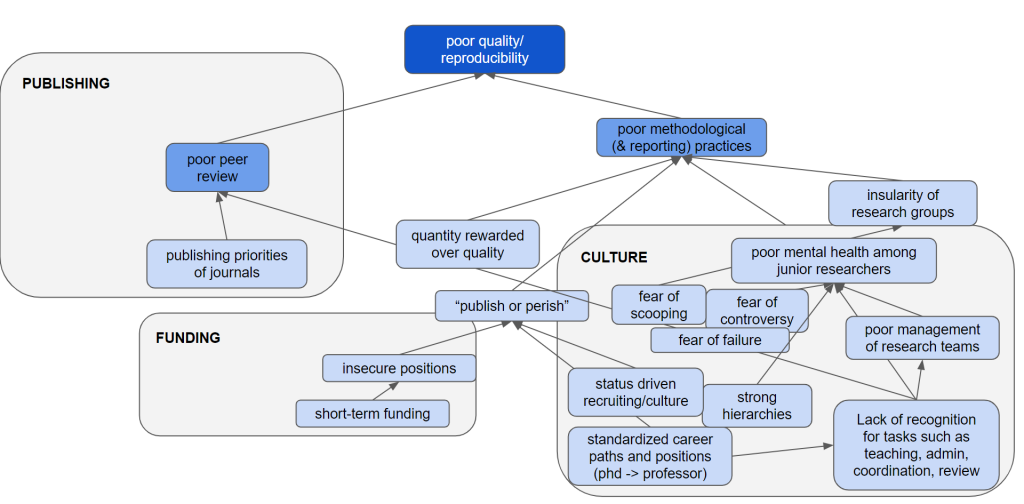

The reproducibility crisis (or replication crisis) refers to the realization that many scientific studies are difficult or impossible to replicate or reproduce. This means that the value of published research results is questionable. Figure 3 maps underlying drivers of poor research quality and reproducibility. The direct causes that have been identified are poor peer review as well as the poor methodological and reporting practices themselves. These practices are driven by many root causes, primarily linked to academic culture.

3.1 Poor peer review

Peer review is the process by which experts in the field review scientific publications before they are published. In theory, this system is supposed to guarantee quality. If there are flaws in the study design, the methodology, or in the reporting of results, the reviewers should see this and either make the author improve their work or reject the paper completely.

In practice, though, this doesn’t work very well, and even top scientific journals have run seriously flawed papers. One reason for this is that even though reviewing is viewed as being important for academic career progression, it is done on a volunteer basis without direct compensation to the reviewer. Reviews are usually anonymous, so there is no accountability and no real incentive to do peer review well. This leads to reviews being either done hastily or delegated to more junior researchers who might not have the experience to do them well.

High scientific quality should be among the top publishing priorities of journals, but it seems as if in practice that is not the case. If large, profitable and well-respected journals had scientific quality as a top priority, they should be able to achieve it. In reality, it appears that top science journals might even be attracting low-quality science, partly because they prioritize publishing spectacular results. Since getting published in a top journal can be extremely valuable to a researcher’s career, there is a great incentive to cut corners or even cheat to achieve such spectacular results.

3.2 Poor methodological and reporting practices

Apart from flaws in peer review, the main driver for poor research quality and reproducibility is poor methodological and reporting practices. Poor methodology refers to when the study and/or data analysis is poorly done. Of course, there are many field-specific examples of poor methodology, but there are also questionable research practices that occur in many different fields of research.

One prominent example is p-hacking (or “data dredging”), which describes when exploratory, or hypothesis-generating, research is not kept apart from confirmatory, or hypothesis-testing, research. This means that the same data set that gave rise to a specific hypothesis is also used to confirm it. P-hacking can lead to seemingly significant patterns or correlations in cases where, in fact, no such pattern or correlation exists.
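A small simulation can show why mixing exploratory and confirmatory analysis is a problem. The sketch below assumes a made-up setup: each experiment compares two groups on 20 outcome measures, none of which has any real effect, and the analyst reports whichever outcome looks best. The group size, number of outcomes, and the use of a simple z-test with known variance are all illustrative assumptions.

```python
import math
import random

# Illustrative assumptions: there is no real effect anywhere in this simulation.
ALPHA = 0.05          # conventional significance threshold
N_PER_GROUP = 20      # participants per group
N_OUTCOMES = 20       # outcome measures inspected in the exploratory step
N_EXPERIMENTS = 500   # number of simulated experiments


def p_value(rng):
    """Two-sided z-test p-value for two groups drawn from the same N(0, 1)."""
    a = [rng.gauss(0, 1) for _ in range(N_PER_GROUP)]
    b = [rng.gauss(0, 1) for _ in range(N_PER_GROUP)]
    diff = sum(a) / N_PER_GROUP - sum(b) / N_PER_GROUP
    z = diff / math.sqrt(2 / N_PER_GROUP)  # known variance of 1 in each group
    return math.erfc(abs(z) / math.sqrt(2))


def simulate(seed=0):
    rng = random.Random(seed)
    hacked = confirmed = 0
    for _ in range(N_EXPERIMENTS):
        # Exploratory step reused as confirmation: keep the best-looking outcome.
        best_p = min(p_value(rng) for _ in range(N_OUTCOMES))
        if best_p < ALPHA:
            hacked += 1
        # Proper confirmation: test the chosen outcome once on fresh data.
        # All outcomes are null here, so a fresh draw stands in for it.
        if p_value(rng) < ALPHA:
            confirmed += 1
    return hacked / N_EXPERIMENTS, confirmed / N_EXPERIMENTS


hacked_rate, confirmed_rate = simulate()
print(f"'significant' when reusing the exploratory data: {hacked_rate:.0%}")
print(f"'significant' when confirming on fresh data:     {confirmed_rate:.0%}")
```

With 20 independent null outcomes, the chance that at least one falls below 0.05 is 1 − 0.95^20, or roughly 64%, whereas a single pre-specified test on new data stays near the nominal 5% false-positive rate.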

Poor reporting overlaps with poor methodology, but can also be a separate issue where the actual method is scientifically sound. If, for example, details of experiment design, raw data, or code for data analysis are not shared publicly, it is very difficult for someone else to independently repeat a study to confirm the results. In theory such data could be obtained from the corresponding author, but in practice records are often poor: more than 70% of researchers have tried and failed to reproduce another scientist’s experiments, and more than half have failed to reproduce their own experiments.

Methodological and reporting practices are linked to the academic culture. Methodology and routines for documentation and reporting are often taught informally within research groups. Informal training combined with the insularity of research groups, where methodological practices are not shared and discussed between different groups, leads to lack of transparency. Strong hierarchies also contribute to lack of scrutiny as it can be hard for junior researchers to challenge the judgement of their supervisors.

Finally, there is the issue of quantity over quality. The pressure to publish (“publish or perish”) pushes researchers to submit flawed studies for publication, as there is a widespread perception that quantity is rewarded over quality in a researcher’s career. There are a number of declarations, manifestos, and groups claiming that the metrics we are currently using, which focus on quantity, are flawed, with (potentially) catastrophic consequences.

Meanwhile, a study of Swedish researchers states that such criticism lacks empirical support. A full evaluation of this is outside the scope of this post. However, it might be worth reflecting on Goodhart’s Law: “When a measure becomes a target, it ceases to be a good measure”. In other words, evaluating the quality of scientists based on the number of their publications would only lead to scientists who produce a lot of publications. And in order to produce studies faster, researchers would neglect the “boring things”, such as documentation and organization of research artifacts, and might also end up with flawed methodologies.

4. Results are not used in the real world

Even when research produces valuable results, their actual impact will often depend on their implementation outside of academia. In some cases (e.g. basic research) this might be less relevant as the end users are also in academia. Thus impact will be generated through the accumulated work of other researchers that builds further on these results.

However, for most applied research, the results have essentially no impact unless they are used “in the real world” outside academia. Use of results looks very different for different fields and involves different types of end-users: it could be a change of laws or policy that is implemented by politicians and public servants, or a new material that is used by a private company to produce better consumer electronics, or the implementation of better infection prevention methods in hospitals by healthcare staff. None of these is likely to happen as a direct result of a researcher publishing a paper in a scientific journal, unless the researcher also engages in other forms of communication and collaboration.

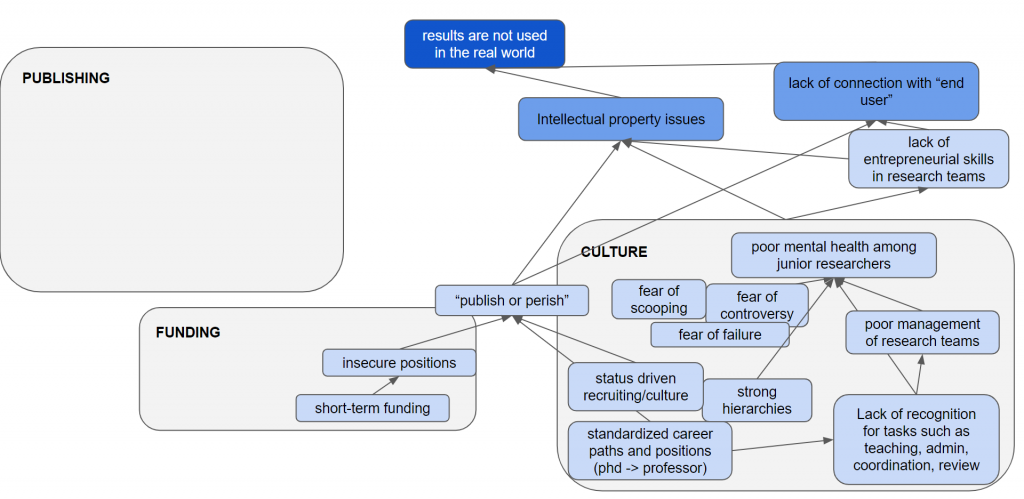

Figure 4 maps underlying reasons for why research results are not put to use. The direct causes that have been identified are intellectual property issues and lack of connection with the end user. The underlying causes seem to mostly depend on problems with the academic culture.

4.1 Intellectual property issues

For research that results in intellectual property (IP), typically an invention that can be protected by a patent, the handling of the IP rights is extremely important for how likely the results are to be used. The ownership of the IP generated by research works in different ways in different locations. In Sweden and Italy, for example, the researcher has full personal ownership of any IP their research generates through the so-called “professor’s privilege”. In other locations, for example in the US and in the UK, IP rights to the results of publicly funded research are owned by the institution where the researcher works (institutional ownership). There also exist more complicated variations where IP ownership is split between the researcher and the institution.

Protecting IP means that nobody else can use the invention without permission, and it is a common perception that a patent is most of all a barrier to implementation. The practice of patenting discoveries, especially those that come as a result of publicly or philanthropically funded research, has been heavily criticised for creating barriers to innovation and collaboration, being a bureaucratic and administrative burden for universities, and creating distorted incentives for researchers. For example, researchers may be deterred from investigating the potential of a compound that is patented by somebody else, as they know they will be dependent on the patent holder for permission to use any new results.

On the other hand, IP protection can be crucial to attract investments to take an invention all the way to practical use. Say, for example, that a research group has identified a compound that could be a very promising new medication. It is generally only if the IP is protected that investments will be made to develop this promising compound into a drug. The process of developing and commercialising a new product is expensive and risky, and patent ownership is what makes it possible to recoup these investments if the project is successful. This means that if the group does not patent the compound (or otherwise protect the IP), it will never be developed into a drug.

One way that IP issues can prevent results from being used is therefore simply sloppiness: nobody realized there was IP that should be protected, or nobody bothered to do it. A patent can only be granted for a novel and unknown invention; if the IP has ever been described in public, it cannot be protected. The possibilities to get investments into commercial development then become very slim, even for promising inventions. Protection of intellectual property can thereby be in direct conflict with the focus on rapid publication of results (the “publish or perish” culture).

On the other hand, filing and maintaining patents is no guarantee of implementation. A lot depends on the patent owner, and it’s unclear what policies generate the best results. When the individual researcher holds the patent personally, a lot depends on that researcher’s skills and motivations. If they don’t have the interest or the ability to commercialize the invention, it will generally never happen. The academic culture does not encourage entrepreneurship, and many research groups lack members with entrepreneurial skills.

When patents are instead owned and managed by the university through some kind of venture or holding company, a little less depends on the individual researcher. However, this leads to other issues: the staff managing IP exploitation do not have a personal stake in the potential startup, which decreases their incentives to do a great job. Also, since they manage a portfolio of patents, different patents generated from the same university compete with each other for resources and priority.

4.2 Lack of connection with end-user

It seems common that a researcher has the view that their job is done by producing and publishing results in scientific journals, which is logical since that is how a research career is measured and rewarded. For a result to be used in the real world, however, publishing is rarely enough.

Section 2.6 covers the lack of connection with end-users in the design of research questions. The most serious consequence of this is when the research question itself is fundamentally irrelevant for the stakeholders. When there is no direct contact between researcher and end-user, the researcher also does not get any clear feedback on why the results remain unused which makes it difficult to improve.

Contact with the end-user is also crucial after the results have been obtained. Working with implementation is time-consuming, difficult, and often not rewarded by the academic system. Implementation work can look very different between fields: in political science or economics, the stakeholders could be politicians or staff at government bodies, while in biotechnology or physics it might be private companies. Either way, close collaboration between researcher and end-user is often a prerequisite for proper implementation.

5. Conclusions

The academic research system is very complex, and there are many different issues that cause waste of resources within it. Though research produces a lot of value of different kinds – e.g. improved medical treatments, more efficient food production, sustainable energy technologies, or improved understanding of the universe – there also seems to be a lot of room for improvement.

An issue that came up in the process of creating this piece but that was not included in the mapping is a general scarcity of flexible research funding. Increased (and less restricted) funding might be a possible solution to some of the problems mentioned here. Still, it’s unclear if it would be more effective to increase funding or to address other problems in the system.

As mentioned in the introduction, to make this writeup manageable I opted not to include issues that seem to just slow progress towards results without jeopardizing their value. Such issues would include requirements for researchers to spend their time on other things than research, and I think a discussion on the subject of what researchers should and should not spend time on would fit better in a separate post.

Proposed solutions and reform initiatives are also outside the scope of this post, but it’s worth mentioning that a lot of work is being done by different organizations.

During the next year I plan to work further on understanding and describing challenges as well as solutions and reforms, with the ultimate aim of figuring out what could be effective ways to contribute to the improvement of scientific research.